Auctions Insights

Designing a self-serve troubleshooting tool for Connected TV ad auctions

Role

Lead Product Designer

Team

Staff PM, 3 Engineers

Timeline

MVP GA: November 2025

Main Dashboard (Phase 2): In progress, Q1 2026

Overview

Publica is a Connected TV ad server that helps publishers manage and maximize the value of their ad inventory by running unified auctions. These auctions automatically determine which ad wins based on price, brand safety, and viewer data.

Auctions Insights is a diagnostic feature that brings transparency to the auction process, helping Ad Ops teams quickly understand why ads aren’t serving and what actions to take next.

The Problem

Ad auctions are complex and opaque.

When an ad doesn’t serve, users currently:

Lack visibility into where the auction failed

Don’t know why bids were dropped or rejected

Often rely on internal ops teams to troubleshoot

Lose valuable time and sometimes revenue before issues can be fixed

Core Problem: We don’t have an easy way to understand why an ad is not serving.

This leaves users without actionable next steps and creates friction between publishers, internal ops teams and clients.

Discovery & Research

“We need to find out the why.”

Because this project was design-led, I invested heavily in discovery before proposing solutions.

What I did

Internal interviews: Spoke with Publisher Ops teams who interface with clients daily

How do they troubleshoot today?

How often do clients ask for help?

What data do they investigate?

What’s slow or painful about the current process?

Workflow analysis: Observed how users run queries manually, a process that is often time-consuming and repetitive

Competitive analysis: Evaluated tools and competitors

What insights do they provide?

Where do they fall short?

How can we differentiate?

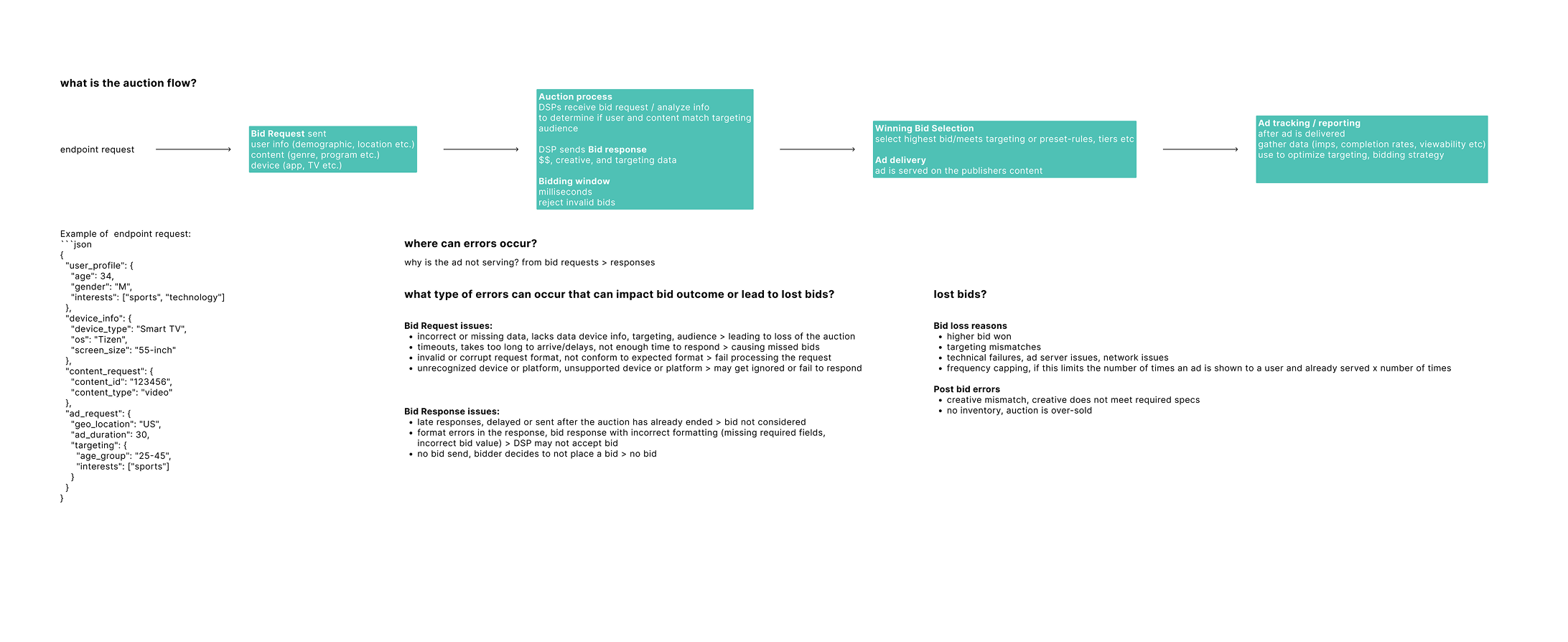

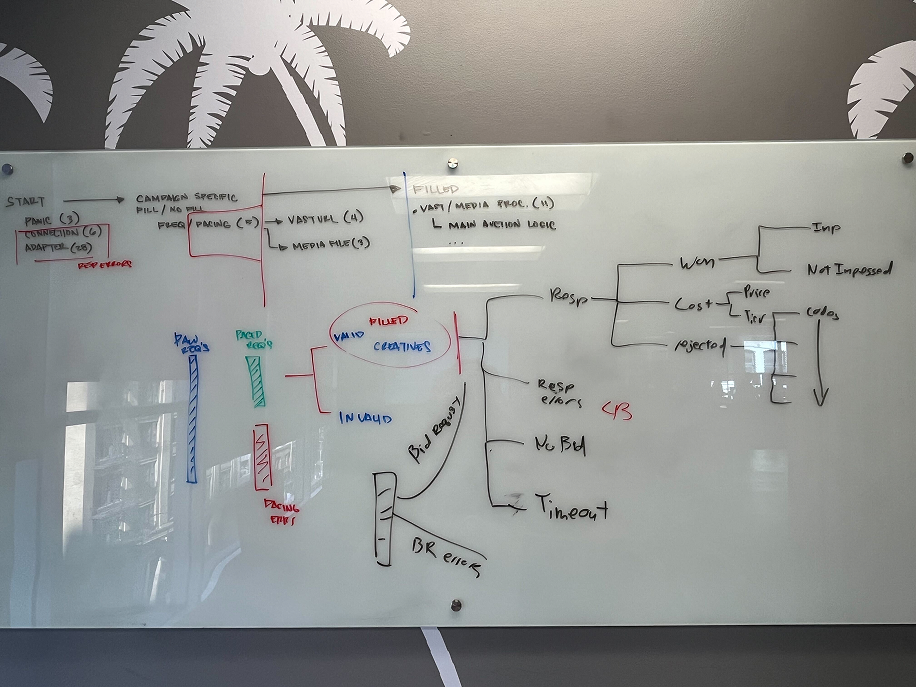

Domain deep dive: Learned the full auction flow (endpoint request > bid request > auction > bid validation > outcome)

I mapped

Where errors occur

Which errors impact bid outcomes

What data we currently store

What could realistically be visualized

Pinning the Problem

Through research, three clear needs emerged:

Users need to troubleshoot errors quickly

Users need visibility into auction “waste”

Users need a visual overview to understand issues at a glance

Key User Stories:

As a user, I need to find errors quickly so I can troubleshoot efficiently.

As a user, I need to understand where bids are lost so I can take action.

As a user, I want a visual representation of errors to quickly grasp what’s happening.

Project Goals

Goal 1: Visibility

Create an intuitive troubleshooting experience using new data visualizations

Introduce a Sankey chart to visualize auction flow and drop-off points

Close a competitive feature gap

Goal 2: Self-Service

Enable users to take action directly from the data

Reduce dependency on internal ops teams

Improve serviceability and client retention

Address direct user feedback

Goal 3: Business Impact

Drive optimization and revenue for publishers 💰

Tie insights to actionable improvements

Align design decisions with product success metrics

Framing the work around these goals helped me pitch and align the idea with Product and Engineering.

Ideation & Alignment

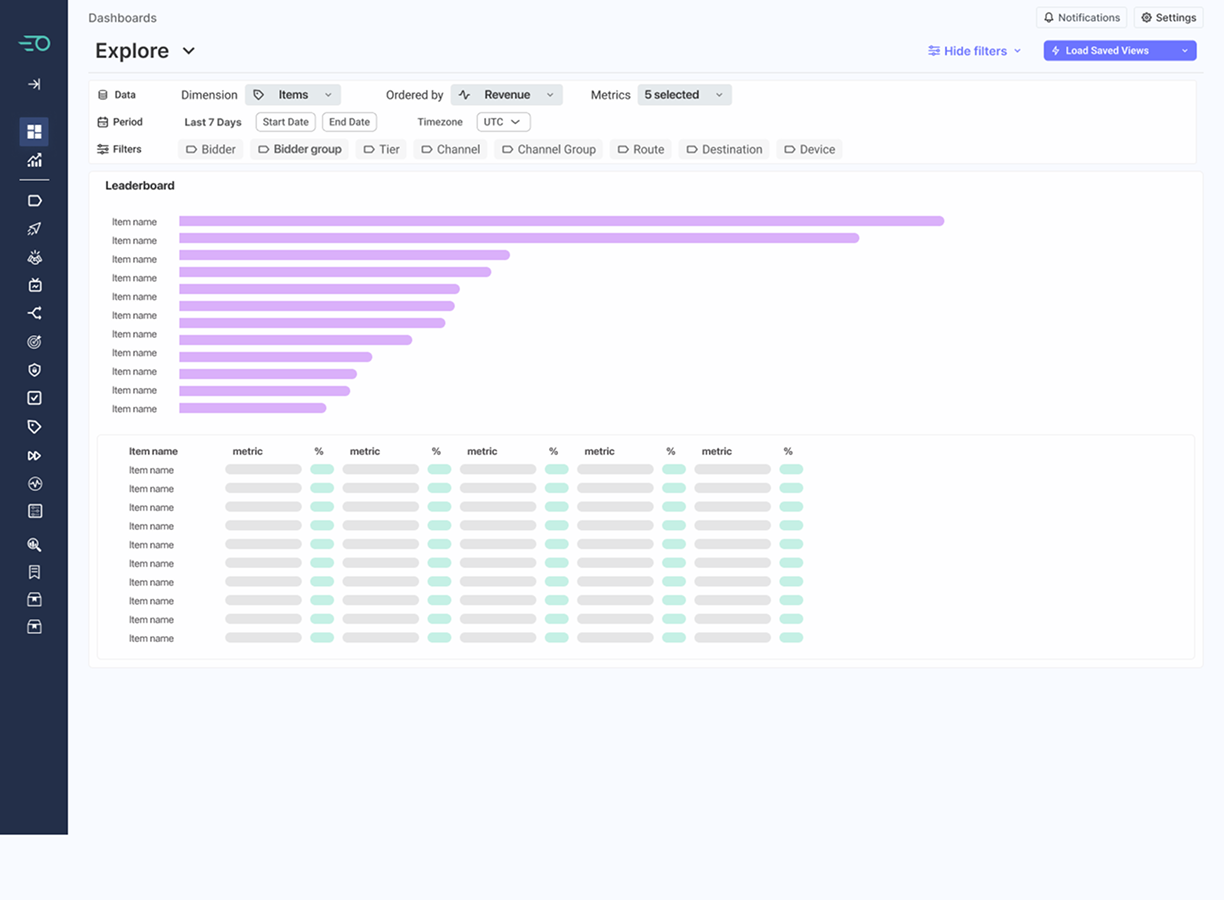

I explored solutions through low- to mid-fidelity designs, focusing on:

Making complex auction data understandable

Balancing depth with usability

Collaboration & Buy-In

Socialized designs with PM and internal users

Reviewed technical feasibility with Engineering leads

Iterated on feedback from my manager

Formally presented to PMs to align on data flow and aggregation constraints

This phase involved a lot of back-and-forth, especially around what data we could realistically surface.

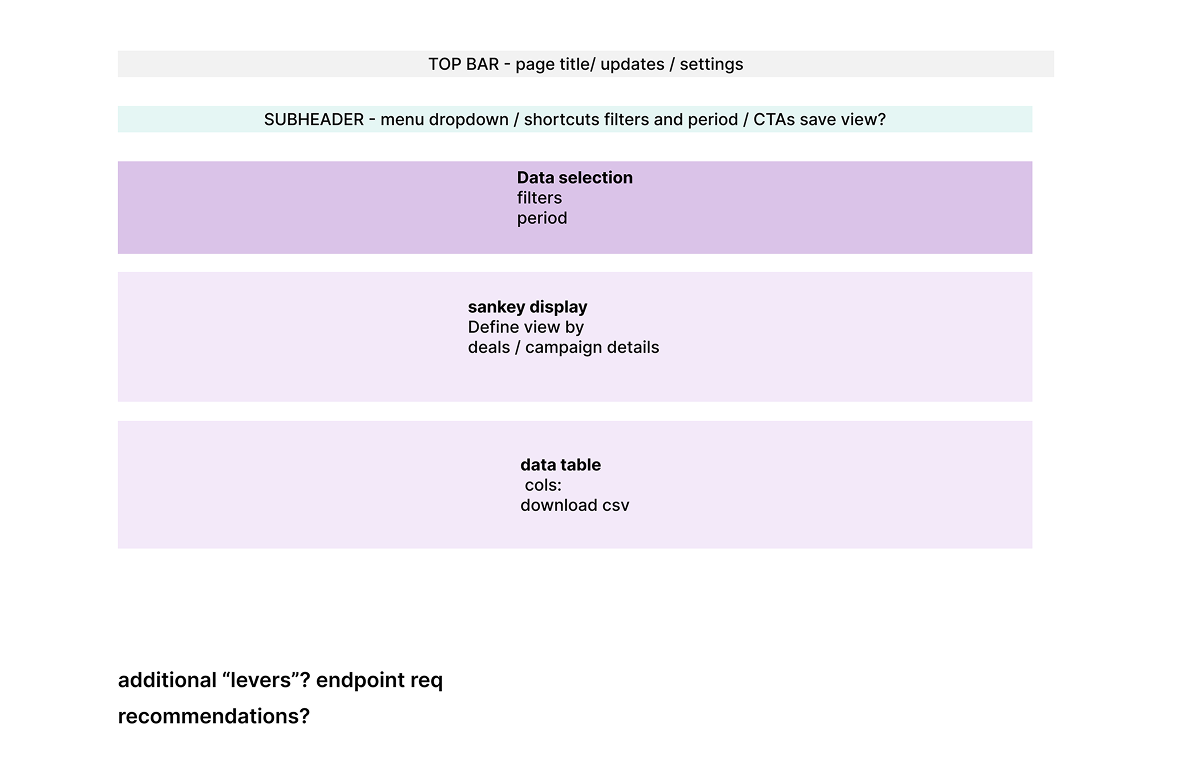

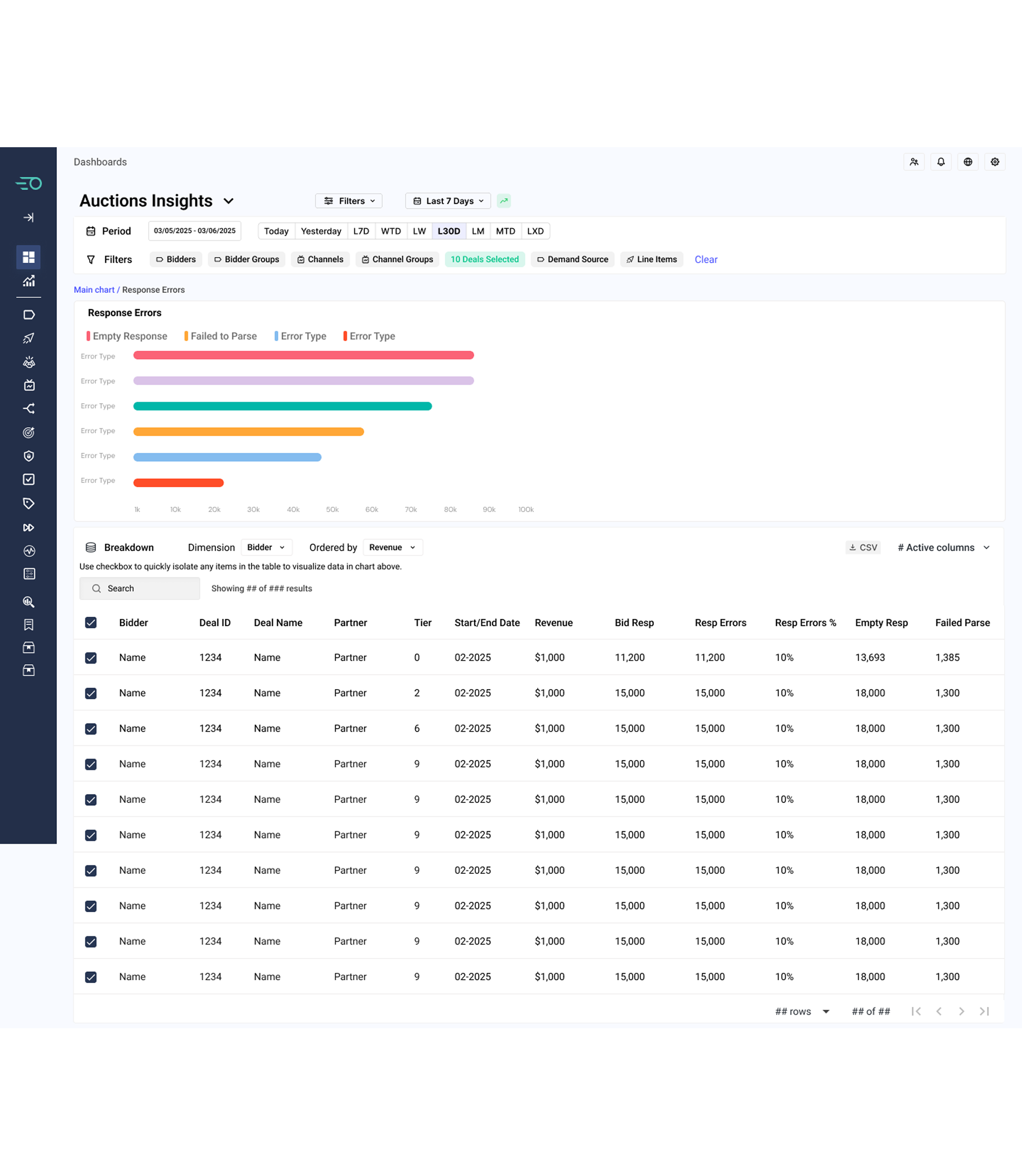

Initial Solution Concept

Auctions Insights within the Main Dashboard

User-defined time range

Filters for specific data sets

High-level visualization of auction flow

Tabular breakdown below for detailed analysis

Ability to drill into specific error types (e.g. response errors)

Challenges

1. Scope

The initial vision was too large for an MVP. Engineering was overwhelmed by the amount of work required.

2. Data Availability

At the time, not all auction data was stored in a way that supported a full Sankey visualization. We needed time to populate and validate the data.

3. Feedback Loops

Building the full dashboard upfront would delay feedback from real users.

Key Turning Point: Start Small

We hit a critical question early:

How do we build something useful while starting small enough to validate quickly?

I led a whiteboarding session with Engineering to define:

Must-have vs. nice-to-have

A phased delivery plan

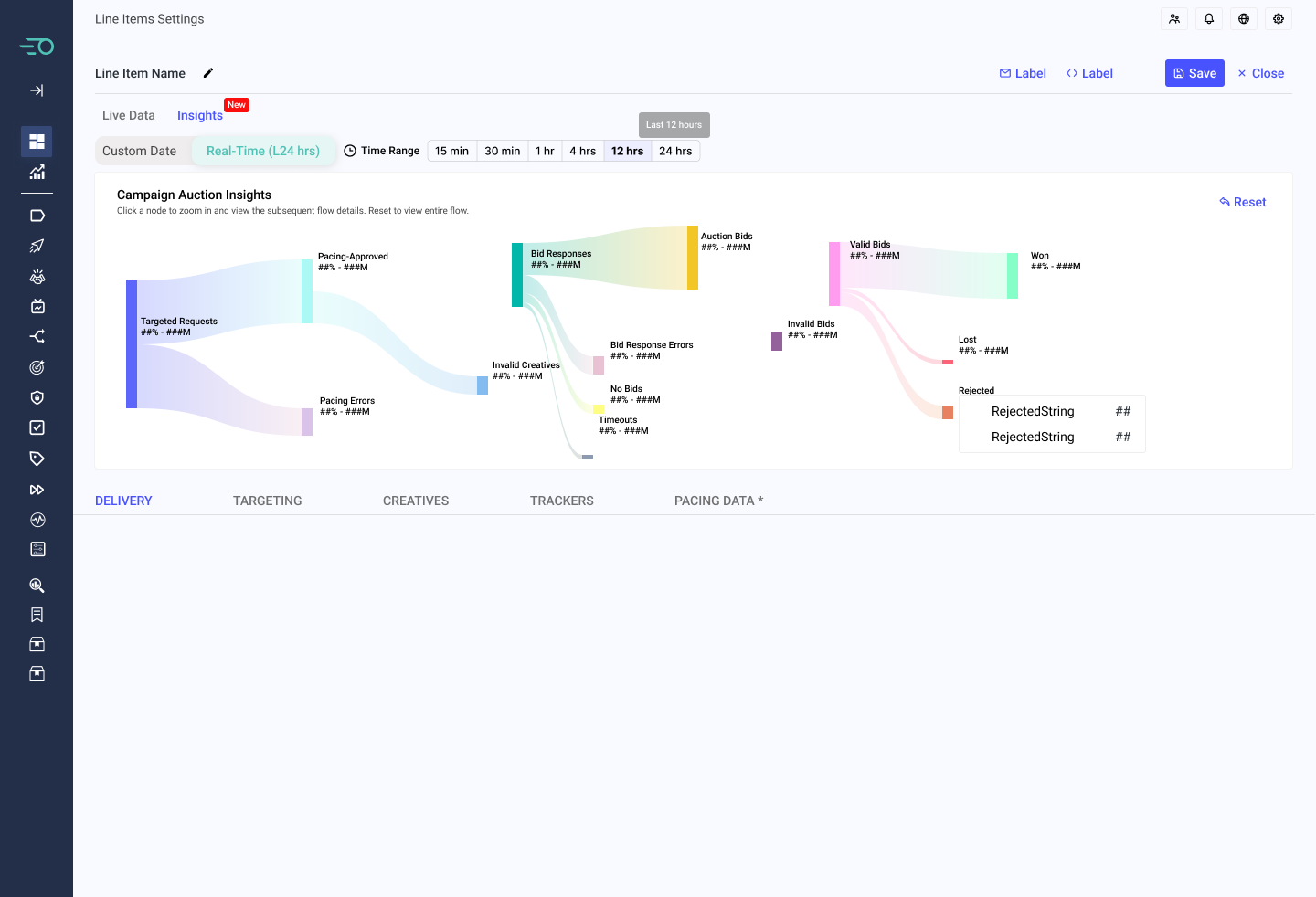

Phase 1 Strategy

We agreed to start with line-item (single campaign) insights:

Faster to build

Easier to validate data accuracy

Allows incremental internal feedback

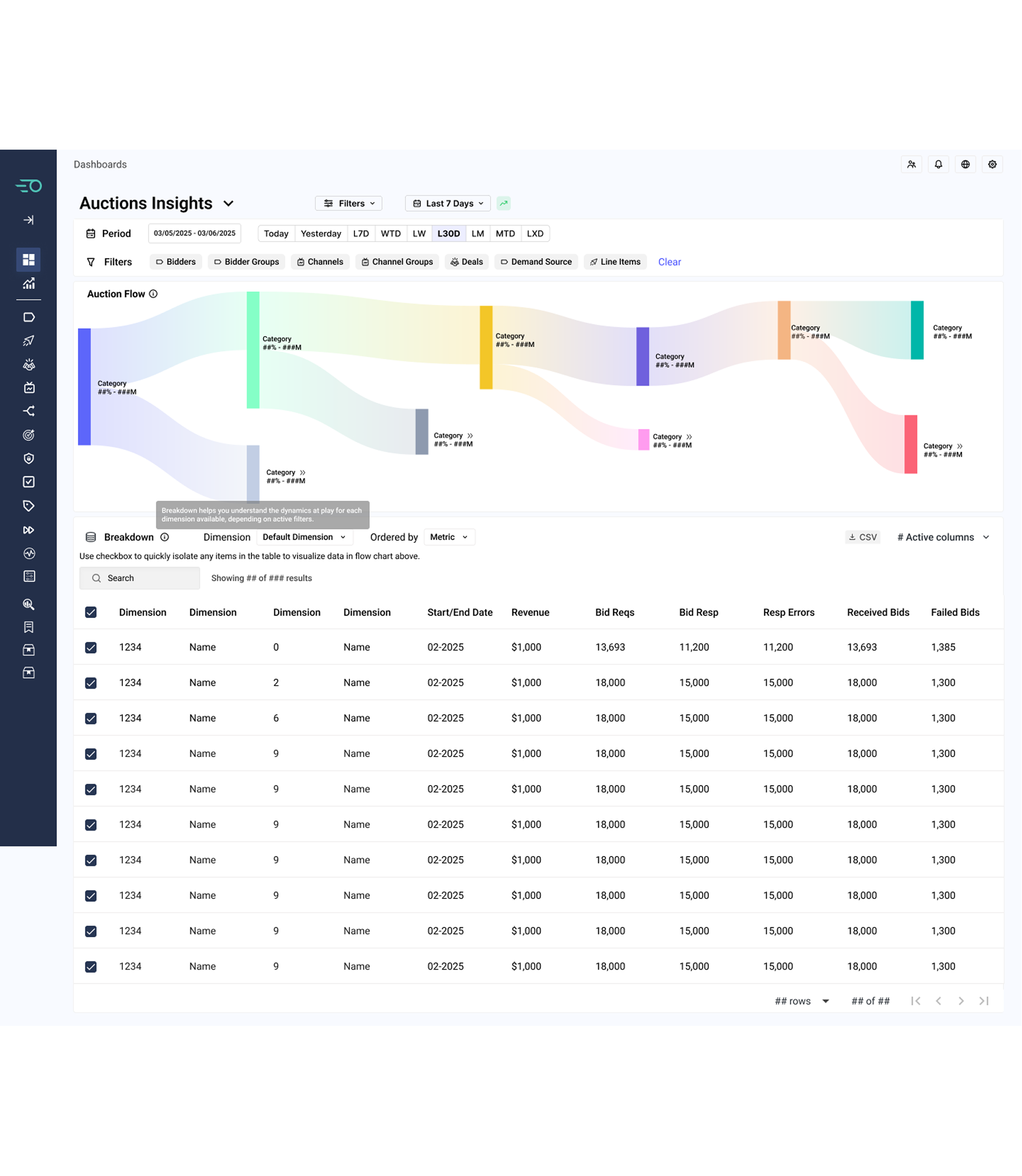

What Phase 1 Shows

Using real campaign data, we visualized the auction flow:

Targeting requests

Pacing approvals & errors

Bid responses

Auction bids

Invalid vs. valid bids

Won, lost, and rejected outcomes

Errors surfaced along the way
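To make the flow above concrete, here is a minimal sketch of how stage counts could be modeled as Sankey links and checked for proportionality. It is an illustration only, not the production implementation: the `Link` type, the node topology, the "No bid response" node, and every count are hypothetical, chosen to loosely follow the stages listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One Sankey band: `value` events flowing from `source` to `target`."""
    source: str
    target: str
    value: int


# Hypothetical counts for a single line item (not real campaign data).
LINKS = [
    Link("Targeting requests", "Pacing approved", 900),
    Link("Targeting requests", "Pacing errors", 100),
    Link("Pacing approved", "Bid responses", 600),
    Link("Pacing approved", "No bid response", 300),
    Link("Bid responses", "Valid bids", 450),
    Link("Bid responses", "Invalid bids", 150),
    Link("Valid bids", "Won", 200),
    Link("Valid bids", "Lost", 180),
    Link("Valid bids", "Rejected", 70),
]


def outflow_shares(links):
    """Return each link's share of its source node's total outgoing volume."""
    totals = {}
    for link in links:
        totals[link.source] = totals.get(link.source, 0) + link.value
    return {
        (link.source, link.target): link.value / totals[link.source]
        for link in links
    }


def unbalanced_nodes(links):
    """List intermediate nodes whose inflow does not equal their outflow."""
    inflow, outflow = {}, {}
    for link in links:
        outflow[link.source] = outflow.get(link.source, 0) + link.value
        inflow[link.target] = inflow.get(link.target, 0) + link.value
    # Only nodes with both incoming and outgoing links can be compared.
    return sorted(
        node for node in inflow.keys() & outflow.keys()
        if inflow[node] != outflow[node]
    )
```

In this sketch, `outflow_shares` gives the drop-off at each step (for example, the share of targeting requests that end in pacing errors), and `unbalanced_nodes` flags any stage where the bands entering and leaving it do not add up, which is one way a chart could end up looking out of proportion.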

With stakeholder buy-in, I updated the designs accordingly.

MVP Launch

The MVP shipped to production in November 2025.

Early Feedback

“This feature is great! I’ve been using it to investigate Tegna’s pacing concerns.”

Impact:

Proactive, real-time diagnostic visibility

Confirmed strong demand for unified auction data

Established the foundation for the Q1 Main Dashboard

The launch included extensive QA, iteration, and collaboration, especially around:

Real-time vs. historical views

Sankey accuracy and proportionality

Interaction limitations (zoom, scale, readability)

What’s Next

Refining Phase 1 insights

Expanding into the Main Auctions Insights Dashboard

Targeting GA release in Q1 2026

Learnings

Flexibility: Breaking a large vision into phased releases allowed us to ship faster without losing sight of the core problem.

Domain depth matters: A deep understanding of the auction flow helped me confidently define what was critical vs. supplementary.

Design advocacy: Research and user feedback enabled me to influence product direction and keep Publica competitive in the market.